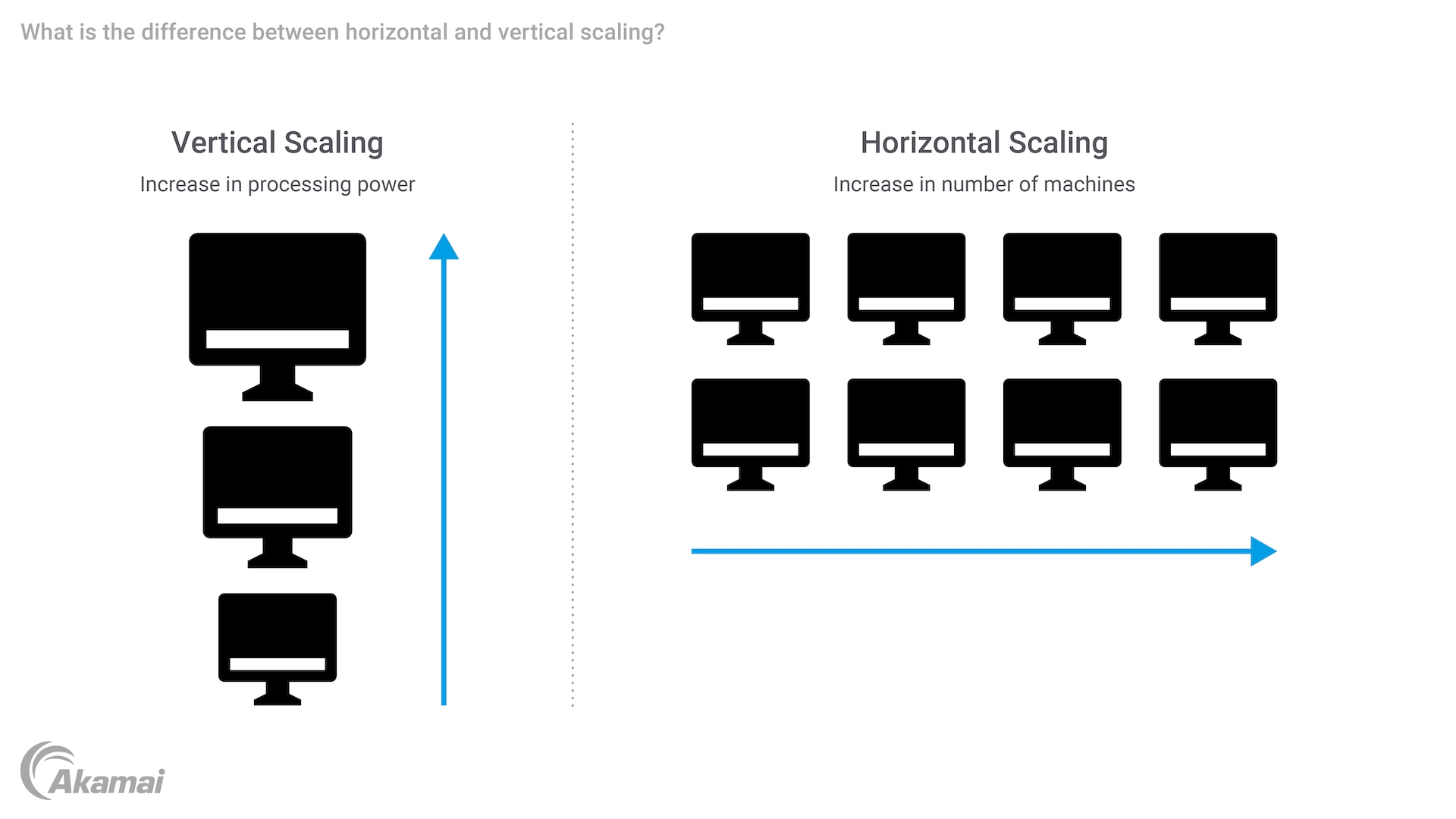

Many APIs are designed to be stateless, meaning the server does not retain client session data between requests: each request carries everything needed to process it. This property matters when choosing how to scale. Because no instance holds data that the others need, a stateless API does not require session data to be replicated across instances, which makes horizontal scaling simpler and more efficient. System admins can add or remove VMs as needed without disrupting the API, since the client does not depend on any particular server instance.

There is nothing wrong with being stateful. Indeed, keeping state may be essential to how the app is meant to work. However, horizontal scaling is significantly more complicated for a stateful app, because each new instance needs the data held by the existing ones. Scaling out means copying that stored data from the original instance to every new instance, and keeping the copies consistent afterward.
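To make the copying problem concrete, here is a minimal sketch in Python. It assumes a hypothetical `StatefulInstance` class that keeps shopping-cart sessions in its own memory, plus a hypothetical `scale_out` helper that starts a new instance with or without copying that state. None of these names come from a real framework.

```python
import copy


class StatefulInstance:
    """Hypothetical app instance that keeps session data in its own memory."""

    def __init__(self, name):
        self.name = name
        self.sessions = {}  # session_id -> per-client data, stored only on this instance

    def login(self, session_id, user):
        self.sessions[session_id] = {"user": user, "cart": []}

    def add_to_cart(self, session_id, item):
        session = self.sessions.get(session_id)
        if session is None:
            # This instance never saw the session, so it cannot serve the request.
            return "error: unknown session"
        session["cart"].append(item)
        return f"{session['user']} has {len(session['cart'])} item(s)"


def scale_out(existing, new_name, copy_state):
    """Hypothetical helper: start a new instance, optionally copying stored sessions."""
    new = StatefulInstance(new_name)
    if copy_state:
        # Replicate every stored session onto the new instance.
        new.sessions = copy.deepcopy(existing.sessions)
    return new


vm1 = StatefulInstance("vm1")
vm1.login("abc", "alice")
vm1.add_to_cart("abc", "book")

vm2 = scale_out(vm1, "vm2", copy_state=False)
print(vm2.add_to_cart("abc", "pen"))  # error: unknown session

vm3 = scale_out(vm1, "vm3", copy_state=True)
print(vm3.add_to_cart("abc", "pen"))  # alice has 2 item(s)
```

Even after the copy, `vm1` and `vm3` drift apart as soon as either one accepts a write (here `vm1` still holds one item), which is why scaling a stateful app usually needs an ongoing replication or shared-storage strategy, not just a one-time copy.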

A stateless app or API, in contrast, does not store request data or hold session data in memory between requests. Each time a request arrives, it’s as if the app is meeting the client for the first time. Once the response is sent, it’s “goodbye,” with no memory of the exchange.
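A stateless handler can be sketched as a function whose output depends only on the incoming request. In this Python sketch, the `Request` shape and `handle_add_to_cart` function are hypothetical: the client sends its current cart with every call, and the server returns the updated cart without remembering anything.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    # Hypothetical request shape: the client sends everything the server needs.
    user: str
    cart: tuple
    new_item: str


def handle_add_to_cart(request: Request) -> dict:
    """Stateless handler: the result depends only on the request; nothing is kept afterward."""
    updated = request.cart + (request.new_item,)
    # The updated cart goes back to the client, which includes it in its next request.
    return {"user": request.user, "cart": updated}


first = handle_add_to_cart(Request("alice", (), "book"))
second = handle_add_to_cart(Request("alice", first["cart"], "pen"))
print(second)  # {'user': 'alice', 'cart': ('book', 'pen')}
```

In practice, such state often lives in a shared database or cache rather than with the client; the sketch keeps it with the client only to show that the handler itself remembers nothing between calls.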

Horizontal scaling works well for a stateless app because it doesn’t matter which VM responds to a given API call. The API client can be served by any number of VMs hosting the API, and each of them will handle the same request the same way. System admins can add or remove VMs freely without affecting the operation of the API.
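The following Python sketch shows why the choice of VM stops mattering. It assumes a hypothetical `RoundRobinPool` standing in for a load balancer and a hypothetical `make_vm` factory whose handler is stateless. VMs are added and removed mid-stream, and every reply is the same regardless of which VM produced it.

```python
import itertools


def make_vm(name):
    """Hypothetical VM running a stateless handler: the reply depends only on the request."""
    def handle(request):
        return {"served_by": name, "total": sum(request["prices"])}
    return handle


class RoundRobinPool:
    """Minimal load-balancer sketch that cycles through whatever VMs are registered."""

    def __init__(self):
        self.vms = []
        self._counter = itertools.count()

    def add(self, vm):
        self.vms.append(vm)

    def remove(self, vm):
        self.vms.remove(vm)

    def dispatch(self, request):
        # Pick the next VM in rotation; no VM holds data the others lack.
        vm = self.vms[next(self._counter) % len(self.vms)]
        return vm(request)


pool = RoundRobinPool()
vm_a, vm_b = make_vm("vm-a"), make_vm("vm-b")
pool.add(vm_a)
pool.add(vm_b)

request = {"prices": [3, 4, 5]}
before = [pool.dispatch(request) for _ in range(4)]

pool.add(make_vm("vm-c"))  # scale out
pool.remove(vm_a)          # scale in
after = [pool.dispatch(request) for _ in range(4)]

# Different VMs answered, but every answer carries the same total.
print({r["total"] for r in before + after})  # {12}
```

Round-robin is used here only because it is the simplest rotation to show; with a stateless handler, any routing policy would give the same answers.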