AI in Cybersecurity: How AI Is Impacting the Fight Against Cybercrime

The changing landscape of AI cybersecurity

Artificial intelligence (AI) is rapidly transforming nearly every industry — and cybersecurity is no exception. AI’s impact on the field is two-fold: On one hand, cybercriminals are using AI to conduct more sophisticated and targeted cyberattacks; on the other hand, AI is driving significant advancements in cybersecurity defenses, enabling security teams to identify and respond to attacks with greater speed and precision than ever before.

In this blog post, we’ll explore both sides of this transformation: how attackers are using AI to launch more sophisticated and targeted attacks, and how cybersecurity experts are using AI to respond.

Fighting the ongoing battle of digital vulnerabilities with AI cybersecurity

Cyber vulnerabilities have increased significantly in recent years, and the rapid evolution of artificial intelligence is playing a key role in that growth. AI enables cybercriminals and hackers to exploit vulnerabilities more effectively, avoid detection, execute more sophisticated attacks, and scale their operations.

The rise of AI cyberattacks: How hackers use AI to breach security

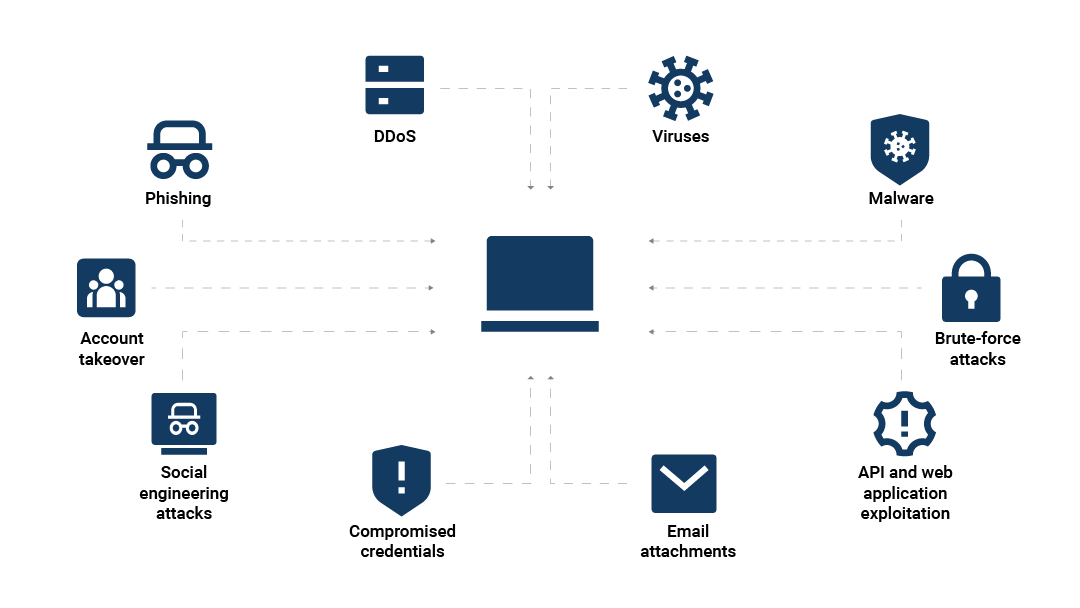

Figure 1 shows the key ways that AI can amplify cyberattacks, including:

AI-powered phishing attacks

Malware and ransomware powered by machine learning

AI in social engineering

AI-powered phishing attacks

A phishing attack is a type of cyberattack in which a cybercriminal tricks an individual into disclosing sensitive information by posing as a trustworthy source.

AI-powered techniques such as deepfakes and advanced AI-driven impersonations have made phishing attacks more realistic and personal — and increasingly difficult to detect. For example, phone calls in which the caller impersonates someone else have been happening for decades — but with AI, the attacker can include the personal information of someone close to the target.

In AI phishing attacks, cybercriminals use AI techniques such as behavioral analysis, voice cloning, and natural language processing (NLP) to create hyperrealistic AI-generated impersonations. By using these technologies, attackers can convincingly mimic the appearance, voice, or even writing and speaking styles of friends and colleagues, which makes it easier to deceive their targets and increases their success rates.

Deepfakes are the latest tool in AI-driven phishing

One increasingly common application of AI in cybercrime is the use of machine learning to create media deepfakes such as videos, images, and audio that appear authentic but are entirely fabricated. The rise of deepfake technology in cybercrime is a growing threat, and the consequences of falling victim to a deepfake phishing scam can be devastating.

In early 2024, an employee in Hong Kong joined a video call with scammers who used deepfake representations of his coworkers to convince him to transfer US$25 million in company funds to the scammers.

Bot operators using AI to pivot more quickly after being detected

Bot management has always been a cat-and-mouse game: Security companies build better detections; bot operators learn how to evade those detections; the security companies create new detections; and the pattern keeps repeating.

AI lets the bot operators drive behavior in more sophisticated ways to reduce the amount of time needed to evade new detections, use more adaptive attack methodologies, and more closely replicate human-like engagement. Indicators of a bot operator leveraging AI include:

Rapid evolution of tactics – Attack methods shift quickly in response to detection.

Increased variation in behavior – AI-driven bots can use techniques like automated fuzzing to explore permutations in an attempt to bypass defenses.

Human-like interaction mimicry – This involves advanced imitation of real user behavior, including randomized patterns in clicks, keystrokes, or mouse movements.
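To make the last indicator concrete, here is a minimal sketch of a naive timing check and why randomized input defeats it. The function names, the 0.1 threshold, and the timestamps are hypothetical and chosen only for illustration; this is not how any particular bot management product works.

```python
from statistics import mean, stdev

def timing_variation(timestamps):
    """Coefficient of variation of the gaps between consecutive events."""
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    return stdev(gaps) / mean(gaps)

def looks_scripted(timestamps, min_variation=0.1):
    """Flag a session whose event timing is suspiciously regular.

    min_variation is an assumed threshold, not a recommended value.
    """
    return timing_variation(timestamps) < min_variation

# Hypothetical click timestamps in seconds.
simple_bot = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]        # perfectly even gaps
human_like = [0.0, 0.42, 1.31, 1.58, 2.77, 3.05]   # irregular gaps

simple_bot_flagged = looks_scripted(simple_bot)
human_like_flagged = looks_scripted(human_like)
```

A bot that randomizes its timing produces gaps like the second list and passes this kind of check, which is why detection that relies on a single fixed signal tends to lose ground against adaptive operators.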

Malware and ransomware powered by machine learning

AI-driven cyberthreats come in many forms. In recent years, the rapid evolution of AI-driven malware has enabled hackers to develop more evasive, adaptable malware, significantly increasing the effectiveness of malware and ransomware attacks.

Ransomware, a type of malware that encrypts a victim's data and demands a ransom for its release, has become more formidable with the integration of AI. Cybercriminals can now use AI in ransomware attacks to avoid detection and execute faster, more complex attacks.

AI-powered adaptive malware allows hackers to bypass security measures more effectively. By analyzing network traffic, this type of machine learning malware learns how to mimic legitimate user behavior and can alter its actions, communication style, and code to avoid detection by traditional security systems.

Additionally, machine learning algorithms enable attackers to more precisely identify vulnerabilities in security systems, target the most valuable data, and tailor encryption methods to the specific characteristics of a system, making the affected data harder to decrypt. Some ransomware attackers also use AI to automate tasks such as encryption, which accelerates attacks and leaves victims with less time to respond.

AI in social engineering

In social engineering attacks, attackers rely on psychological manipulation and deception to obtain sensitive information or assets from their targets. By using AI in social engineering, attackers can create more personalized scams that are more difficult for individuals to identify.

AI analysis enables cybercriminals to concoct highly personalized social engineering scams. By peppering phishing emails and other communications with personal details, recent events, and emotional triggers gleaned from the target’s digital footprint, attackers can craft communications that feel remarkably genuine and can more easily gain trust.

Attackers are also increasingly using conversational AI in cybercrime to scale their efforts, making cyberthreats like AI-powered chatbot scams all the more common. AI chatbot models can now mimic human conversation with impressive accuracy, crafting persuasive arguments and learning to exploit emotional cues. By relying on AI chatbots for cyberattacks, attackers can automate interactions and free up their time to target a larger number of victims without having to engage in direct conversations themselves.

The role of AI in threat detection and security

While it's true that AI has the potential to enhance cyberthreats, its potential to improve cybersecurity is equally significant. By applying the power of machine learning, threat-hunting AI technologies can help organizations detect and respond to threats with greater accuracy and speed than traditional measures.

A few use cases for AI in enhancing security systems include:

Elevating threat detection with AI

AI-driven cybersecurity tools, powered by machine learning algorithms, can analyze vast amounts of data in real time, learn patterns, and identify anomalies that could indicate potential threats. The speed of this analysis enables cybersecurity professionals to detect security incidents faster and more accurately than ever before.
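As a rough illustration of the "learn patterns, then identify anomalies" idea, the sketch below uses a simple z-score baseline in place of a trained machine learning model. The function name, the threshold of 3.0, and the login counts are assumptions made for this example.

```python
from statistics import mean, stdev

def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observations far from the baseline.

    baseline: values collected during normal operation.
    threshold: number of standard deviations treated as anomalous (assumed).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is a deviation.
        return [(i, v, float("inf")) for i, v in enumerate(observed) if v != mu]
    anomalies = []
    for i, value in enumerate(observed):
        z = (value - mu) / sigma
        if abs(z) > threshold:
            anomalies.append((i, value, z))
    return anomalies

# Hypothetical failed-login counts per minute.
baseline = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
observed = [14, 13, 95, 12]

anomalies = find_anomalies(baseline, observed)
```

Here only the spike to 95 is reported. Production tools typically model many signals at once and update the baseline continuously, but the underlying comparison of new activity against learned normal behavior is the same.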

Advanced machine learning tools can also help security professionals identify notoriously difficult-to-spot threats such as lateral movement. Lateral movement is the tactic attackers use to navigate through a network undetected, identifying high-value targets and expanding their access.

For example, Akamai uses graph neural networks (GNNs) to detect potential lateral movement within a network. GNNs first learn the typical interactions among a network’s assets and then use this knowledge to predict future interactions. If an asset's behavior significantly deviates from the established pattern, it is flagged as a potential indicator of lateral movement.

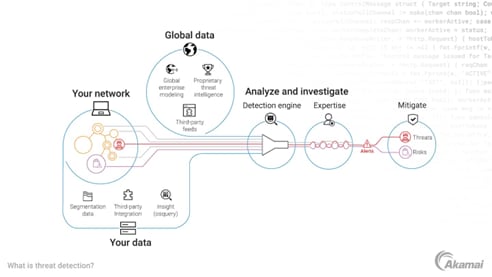

Figure 2 illustrates how Akamai uses AI in its Akamai Guardicore Segmentation and Akamai Hunt cybersecurity services.
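The learn-then-flag approach described for lateral movement detection can be sketched in a much simpler form. The example below is not a graph neural network; it swaps in a plain frequency baseline of previously seen connections to show the general idea. The asset names, ports, and function names are all hypothetical.

```python
from collections import Counter

def build_baseline(connections):
    """Count how often each (source, destination, port) edge was seen historically."""
    return Counter(connections)

def flag_unusual(baseline, new_connections, min_seen=1):
    """Return connections seen fewer than min_seen times in the baseline."""
    # Counter returns 0 for edges that never appeared in the history.
    return [conn for conn in new_connections if baseline[conn] < min_seen]

# Hypothetical history of normal traffic between assets.
history = [
    ("web-01", "app-01", 8080),
    ("web-01", "app-01", 8080),
    ("app-01", "db-01", 5432),
    ("app-01", "db-01", 5432),
    ("admin-01", "db-01", 22),
]

# Hypothetical new traffic to evaluate.
today = [
    ("web-01", "app-01", 8080),
    ("web-01", "db-01", 22),   # web server opening SSH to the database: never seen before
    ("app-01", "db-01", 5432),
]

suspicious = flag_unusual(build_baseline(history), today)
```

A lookup table like this can only recognize exact repeats. A learned model such as a GNN can also judge whether an unseen interaction is plausible given how similar assets behave, which is where the approach described above goes further than this sketch.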

Prioritizing threats and automating responses

AI also plays a pivotal role in security operations by enabling automated incident response, which significantly improves response times compared with manual intervention. By accelerating the response to threats, AI-automated incident response helps organizations mitigate potential damage before an issue can escalate.

Here’s how it works: Once a threat is detected, AI-based systems automatically execute predefined actions to neutralize the risk, such as isolating an affected asset or blocking malicious traffic from entering a network. Additionally, machine learning algorithms assess the severity of threats and prioritize responses based on urgency.

Because of their incredible speed and ability to address multiple threats simultaneously, AI systems offer organizations a more efficient and effective approach to risk management by allowing them to address new threats in real time, at scale.
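The detect, prioritize, and respond flow described above can be sketched as a priority queue feeding a playbook of predefined actions. Everything in this example is hypothetical: the playbook entries, the alert fields, and the toy scoring formula stand in for whatever a real system would learn or configure.

```python
import heapq

# Hypothetical playbook: threat type -> predefined response action.
PLAYBOOK = {
    "ransomware": "isolate_asset",
    "malicious_traffic": "block_source",
    "port_scan": "log_only",
}

def score(alert):
    """Toy severity score: detector confidence weighted by asset criticality."""
    return alert["confidence"] * alert["asset_criticality"]

def respond(alerts):
    """Handle alerts from most to least severe and return the actions taken."""
    # heapq is a min-heap, so the score is negated; the index breaks ties.
    queue = [(-score(alert), i, alert) for i, alert in enumerate(alerts)]
    heapq.heapify(queue)
    actions = []
    while queue:
        _, _, alert = heapq.heappop(queue)
        # Anything without a predefined action goes to a human analyst.
        action = PLAYBOOK.get(alert["type"], "escalate_to_analyst")
        actions.append((alert["asset"], action))
    return actions

alerts = [
    {"type": "port_scan", "asset": "web-01", "confidence": 0.6, "asset_criticality": 2},
    {"type": "ransomware", "asset": "db-01", "confidence": 0.9, "asset_criticality": 5},
    {"type": "malicious_traffic", "asset": "app-01", "confidence": 0.8, "asset_criticality": 3},
    {"type": "unknown_beacon", "asset": "hr-07", "confidence": 0.5, "asset_criticality": 1},
]

actions = respond(alerts)
```

The ransomware alert on the most critical asset is handled first, and the unrecognized alert type falls through to an analyst rather than triggering an automated action.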

Enhancing predictive threat intelligence

Cybersecurity professionals can use artificial intelligence to predict and prevent potential threats based on historical trends, which can increase the accuracy of their threat detection capabilities.

Machine learning models analyze large datasets from a variety of sources. Through this analysis of information from previous incident reports, security logs, network traffic patterns, and more, cybersecurity teams can begin to identify trends and patterns, sharpening their understanding of common cyberthreats.

The AI systems can then use these trends to predict when and how future threats will occur and can set up automation as a preventative measure before a threat has even materialized.

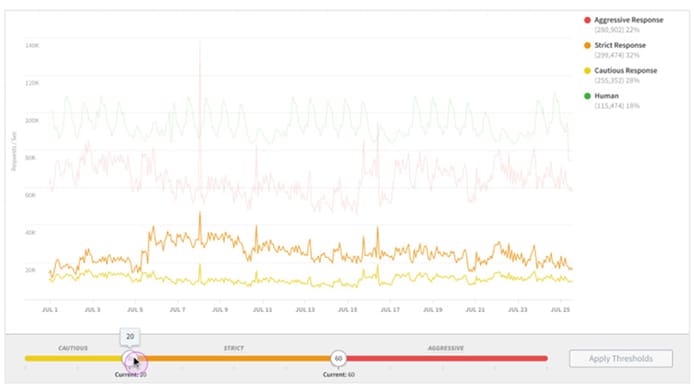

For example, Akamai Bot Manager uses an AI framework to monitor bot traffic and detect threats at the network edge, at scale and in real time (Figure 3).

Understanding limitations: When AI may not be the answer

Although AI offers powerful cybersecurity enhancements, it's important to remember its limitations.

AI systems learn solely from the data that they are given, and they lack the human ability to consider the wider context. Because of this, AI systems can struggle with decision-making in nuanced, complex situations. If an AI system is trained on incomplete or biased information, it will reflect that same bias or lack of context in its decision-making.

This lack of nuance can also cause AI systems to occasionally flag legitimate traffic as potential threats, leading to false positives. False positives can block legitimate users from completing transactions and cause organizations to lose out on business and diminish customer satisfaction.
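A short worked calculation shows why false positives matter even for an accurate detector. The rates below are hypothetical and chosen only to illustrate the arithmetic, which is Bayes' rule applied to alert volume.

```python
def alert_precision(base_rate, true_positive_rate, false_positive_rate):
    """Fraction of alerts that correspond to real attacks."""
    true_alerts = base_rate * true_positive_rate
    false_alerts = (1 - base_rate) * false_positive_rate
    return true_alerts / (true_alerts + false_alerts)

# Hypothetical rates: 1 in 1,000 requests is malicious, the detector catches
# 99% of attacks, and it misfires on 1% of legitimate requests.
precision = alert_precision(
    base_rate=0.001,
    true_positive_rate=0.99,
    false_positive_rate=0.01,
)
```

With these assumed numbers, only about 9% of alerts are real attacks, because legitimate traffic vastly outnumbers malicious traffic. Blocking on every alert would therefore turn away far more legitimate users than attackers.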

Finally, because AI relies on large amounts of data to learn, it raises data privacy and security risks. Without proper security measures in place, a data breach could give attackers unauthorized access to large amounts of sensitive data.

The use of AI is only expected to grow across industries. In a recent McKinsey survey about the state of AI, 71% of respondents report that their organizations are regularly using generative AI in at least one business function, up from 65% last year and a significant jump from just 33% in 2023.

As AI systems become more advanced, they’re predicted to take on an even greater role in cyber defense in the coming years. AI is likely to bring further advancements in threat detection and mitigation, allowing cybersecurity teams to continually scale their efforts and react to threats faster.

Embracing AI for a more secure digital world

AI increasingly plays a crucial role in cyberattacks and cybersecurity. To respond to a continually expanding, highly sophisticated threat landscape, Akamai is at the forefront of strategically and transparently implementing AI to strengthen security.

Akamai’s AI-powered cybersecurity tools perform a variety of critical tasks. Innovative AI-imbued solutions like Akamai Hunt are enabling organizations to detect and respond to threats faster and more accurately than ever before. The Akamai Guardicore Platform blends cutting-edge AI with Akamai expertise to help customers achieve Zero Trust, reduce ransomware risk, and meet compliance requirements.

Strengthen your organization’s security posture with cybersecurity solutions that use AI responsibly and effectively.