As companies recognize the need to protect their high-value AI applications, they’re also worried about the cost of protecting those applications. Large language models (LLMs) are computationally expensive — and protecting them is expensive, too.

Similarly, as cloud costs have inflated with widespread adoption, firms fear that as they scale their AI apps, the increased use will lead to major hikes in the costs of their AI security solutions.

The good news is that there are ways you can insulate your AI app from unnecessary work and save yourself some money in the process.

Get comprehensive protection and cost savings

Akamai Firewall for AI protects AI-driven applications from prompt injections, adversarial queries, and unauthorized manipulations to help ensure that these apps don’t give out toxic content, personally identifiable information, and other proprietary company information.

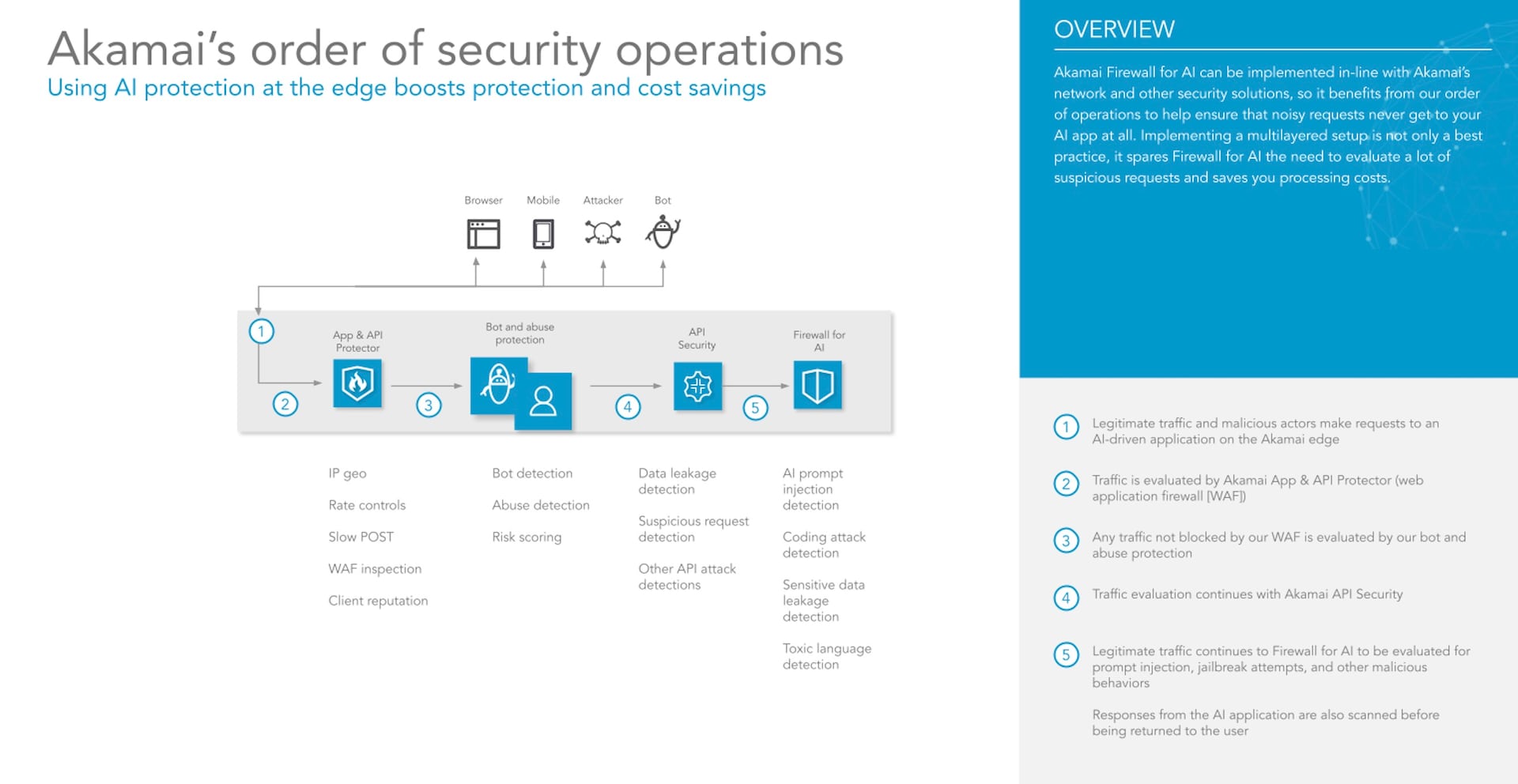

Additionally, Firewall for AI can be implemented in-line with Akamai’s network and other security solutions, so it benefits from our order of operations to help ensure that noisy requests never get to your AI app at all. Implementing a multilayered setup is not only a best practice, it spares Firewall for AI the need to evaluate a lot of suspicious requests and saves you processing costs.

To get both comprehensive protection and the cost savings that comes with reduced inputs, customers can integrate Firewall for AI protection alongside other Akamai security solutions that you may already use to protect your websites, web apps, and APIs.

Akamai’s order of security operations

How does this work? Our security products work in a specific order of operations to protect your AI app with best-in-class protection against traditional app threats, bot attacks, and other threats (Figure).

For example, bot management controls can deflect bots before they flood your AI app with prompt inputs.

Our layered protection model

It’s important to understand that although AI attacks are unique, you should not secure AI apps in isolation. Instead, use a layered model to help ensure that existing threats are addressed before they reach your AI app, and allow your AI protection to focus only on specific threats that impact language model interfaces.

This is the best way to:

- Lighten your AI app's processing load

- Allow Firewall for AI to concentrate only on requests that are risky to LLM apps

- Better cover your entire site's attack surface

- Save on AI compute and protection costs

Learn more

Visit our website to request a demo of Firewall for AI.

Tags