Service meshes and traditional API gateways have distinct roles in managing network traffic within a distributed system. Service meshes focus on internal communication between microservices, offering granular control over traffic management, load balancing, and observability without requiring changes to application code. In contrast, API gateways serve as centralized entry points for external clients, handling tasks such as authentication, authorization, and request routing for client-facing APIs.

A service mesh is a dedicated infrastructure layer that provides network connectivity, security, and observability for microservices within a distributed system. It works by abstracting away service-to-service communication complexities, such as load balancing, circuit breaking, traffic shifting, and retry mechanisms.

Defining a service mesh requires first defining microservices. If you don’t know how microservices work, then an explanation of a service mesh is like a comparison of first class and coach for someone who has never heard of an airplane. A service mesh is a technology that was created to make microservices-based applications run better, though some argue that it often complicates things.

Understanding microservices

Microservices are a modern approach to software application architecture where an application is split into loosely coupled smaller components, known as “services.” Collectively, these microservices provide the overall application functionality. This approach stands in contrast to the traditional “monolithic” application architecture that combines all functionality into a single piece of software.

Netflix is a well-known example of microservices. A decade ago, Netflix was one unified and gigantic software application. Every feature of Netflix resided inside a single massive codebase. The problem with this was that modifying one part of the app meant redeploying the entire thing — not a desirable situation for a busy and commercially significant piece of software.

After migrating to a microservices architecture, each area of Netflix — from content management to account management, players, and so forth — exists as its own microservice. Actually, if we want to get really granular here, each one of these areas consists of multiple microservices. Developers can work on each microservice in isolation. They can change them, scale them, or reconfigure them without concern for their impact on other microservices. In theory, if one microservice fails, it does not bring the rest of the application down with it (though there are outlier scenarios).

What is a service mesh?

With a sense of microservices architecture in mind, consider some of the challenges that could emerge when trying to make a microservices-based application function reliably. The architecture, while revolutionary in its ability to separate applications into independent services, brings with it a number of difficulties.

Communication between microservices, in particular, can be problematic without some mechanism that lets microservices know where other microservices are and how to communicate with them, and that gives admins a sense of what’s happening inside the app. For example, how does the streaming microservice in Netflix know where to look to find information about a subscriber’s account? That’s where a service mesh comes into the picture. However, to appease the folks who aren’t fans of a service mesh, it’s critical to point out that you don’t strictly need a service mesh for this.

A service mesh is a layer of infrastructure that manages communications between microservices over a network. It controls requests for services inside the app. A service mesh also typically provides service discovery, failover, and load balancing, along with security features like encryption.
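
To make service discovery, load balancing, and failover more concrete, here is a minimal Python sketch. It is not how any particular service mesh implements these features; the `ServiceRegistry` class, its method names, and the addresses are all hypothetical and exist only for illustration.

```python
from itertools import count


class ServiceRegistry:
    """Hypothetical in-memory registry; a real mesh keeps this in its control plane."""

    def __init__(self):
        self._instances = {}     # service name -> list of instance addresses
        self._unhealthy = set()  # addresses currently failing health checks
        self._counters = {}      # service name -> round-robin counter

    def register(self, service, address):
        """Service discovery: record where an instance of a service lives."""
        self._instances.setdefault(service, []).append(address)

    def mark_unhealthy(self, address):
        """Failover: stop sending traffic to an instance that is failing."""
        self._unhealthy.add(address)

    def resolve(self, service):
        """Load balancing: return the next healthy instance, round-robin."""
        healthy = [a for a in self._instances.get(service, [])
                   if a not in self._unhealthy]
        if not healthy:
            raise LookupError(f"no healthy instance of {service!r}")
        counter = self._counters.setdefault(service, count())
        return healthy[next(counter) % len(healthy)]
```

In this sketch, a streaming service that needs account data would call `resolve("accounts")` instead of hard-coding an address, and an instance reported through `mark_unhealthy` silently drops out of rotation.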

How does a service mesh work?

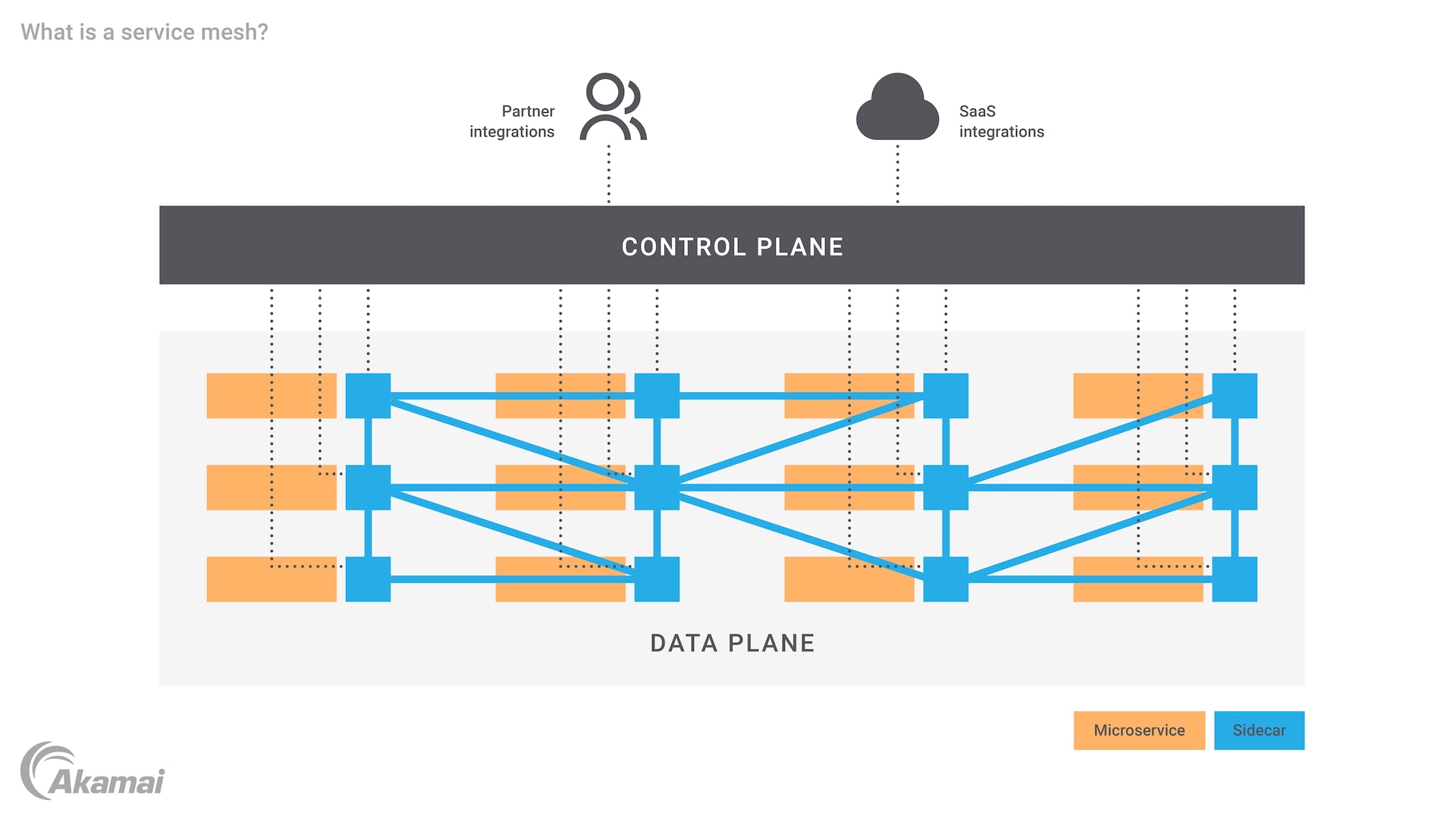

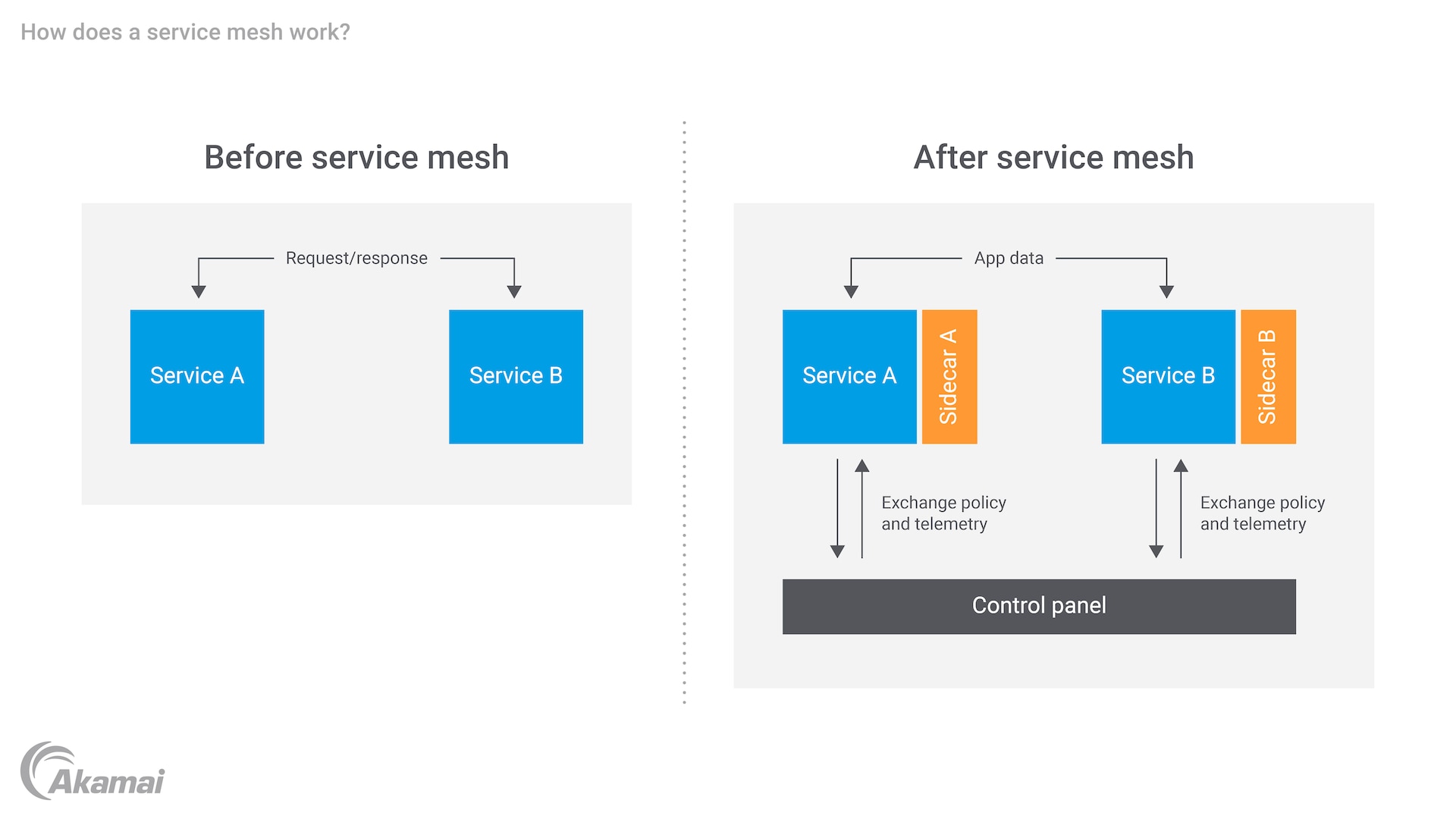

A service mesh’s job is to add security, observability, and reliability to a microservices-based system. It achieves this through the use of proxies known as “sidecars,” which attach to each microservice (there are “non-sidecar” service meshes based on eBPF, as well). Sidecars typically operate at Layer 7 of the OSI model. A sidecar runs alongside each service instance, whether that instance is a container or a virtual machine (VM). Together, the proxies form the “data plane,” while a separate “control plane” configures and coordinates them.

The data plane comprises the sidecar proxies that run next to each service. For each service/sidecar pair, the service deals with the application’s business logic, while the proxy sits between the service and other services in the system. The sidecar proxy handles all traffic going to, and away from, the service. It also provides connection functionality such as mutual Transport Layer Security (mTLS), which lets each service in the request/response message flow validate the other’s certificate.
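
The “mutual” part of mTLS can be illustrated with Python’s standard `ssl` module. This is only a sketch of the idea, not the configuration a mesh proxy actually uses: the function name and its parameters are hypothetical, and the certificate paths are left optional so the snippet runs without any files on disk.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Build a server-side TLS context that also demands a client certificate."""
    # Purpose.CLIENT_AUTH yields a context for the server side of a connection.
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # Requiring a certificate from the client is what makes the TLS "mutual":
    # the client already validates the server, and now the server validates the client.
    context.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # This service's own identity, presented to callers (paths are assumptions).
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The certificate authority trusted to sign the callers' certificates.
        context.load_verify_locations(cafile=ca_file)
    return context
```

In a service mesh, the sidecar sets up the equivalent of this context on behalf of the service, and certificates are typically issued and rotated automatically, so application code never touches them.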

The control plane is where admins interact with the service mesh. It deals with proxy configuration and control, handling the administration of the service mesh, and providing a way to set up and coordinate the proxies. Admins work through the control plane to enforce access control policies and define routing rules for messages traveling between microservices. The control plane may also enable the export of logs and other data related to microservice observability.
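
The split between the two planes can be sketched as follows. The `ControlPlane` and `SidecarProxy` classes are hypothetical and model only access control policies; a real control plane also distributes routing rules, certificates, and telemetry settings.

```python
from dataclasses import dataclass, field


@dataclass
class SidecarProxy:
    """Hypothetical data-plane proxy: enforces whatever policy it was last given."""
    service: str
    allowed_callers: set = field(default_factory=set)

    def authorize(self, caller):
        """Decide locally whether a caller may reach this proxy's service."""
        return caller in self.allowed_callers


class ControlPlane:
    """Hypothetical control plane: holds policy and pushes it to the proxies."""

    def __init__(self):
        self._proxies = {}   # service name -> its sidecar proxy
        self._policies = {}  # callee service -> set of allowed callers

    def attach(self, proxy):
        """Register a proxy and send it the current policy for its service."""
        self._proxies[proxy.service] = proxy
        self._push(proxy.service)

    def allow(self, caller, callee):
        """Admin-facing call: permit one service to call another."""
        self._policies.setdefault(callee, set()).add(caller)
        self._push(callee)

    def _push(self, service):
        proxy = self._proxies.get(service)
        if proxy is not None:
            proxy.allowed_callers = set(self._policies.get(service, set()))
```

The point of the design is that admins change policy in one place, and each proxy makes its decisions locally without contacting the control plane on every request.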

Working together, the service mesh’s data plane and control plane make possible:

Security: distributing security policies, such as authentication and authorization, to microservices, and automatically encrypting communications between microservices. A service mesh can manage, monitor, and enforce security policies through its control plane.

Reliability: managing communications between microservices through sidecar proxies in the data plane, and improving the reliability of service requests in the process. The service mesh can also handle load balancing and fault management, all with the goal of bolstering reliability and delivering high availability (HA).

Observability: showing system owners how microservices are performing. A service mesh can reveal microservices that are “running hot” or at risk of failure. The sidecar proxies emit telemetry data regarding interactions between microservices and other application components, and the control plane can collect and export it.
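
One common fault-management technique behind the reliability point is the circuit breaker. The sketch below is a simplified illustration rather than any mesh’s actual implementation; the class name, the default thresholds, and the injectable `clock` parameter are assumptions chosen to keep the example small and testable.

```python
import time


class CircuitBreaker:
    """Minimal circuit breaker: opens after N consecutive failures."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before a trial call
        self._clock = clock
        self._failures = 0
        self._opened_at = None          # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                # Fail fast instead of piling more load on a struggling service.
                raise RuntimeError("circuit open: call rejected")
            # Cool-down elapsed: allow one trial call ("half-open" state).
            # A single failure now is enough to re-open the circuit.
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success resets the failure count
        return result
```

In a mesh, this logic lives in the sidecar proxy rather than in application code, which is how every service gets the same protection without being modified.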

Kubernetes and service mesh integration

Kubernetes, as a widely adopted container orchestration platform, plays a critical role in managing microservices. Integrating a service mesh with Kubernetes enhances the control, security, and observability of microservices running in the Kubernetes ecosystem. Kubernetes, combined with service meshes like Istio and Linkerd, allows for automatic service discovery, load balancing, and failure recovery. The open source nature of Kubernetes and service meshes means organizations can benefit from a broad community and a rich set of tools that continually evolve to support cloud native applications. By using Kubernetes as the foundation for container management and pairing it with a service mesh, organizations gain better visibility into service-to-service communication, reduce latency in service interactions, and improve overall system resilience.

Service mesh vs. microservices

It is easy to get confused between microservices and a service mesh. The microservices are the component parts of a microservices-based system. The service mesh can connect them. As the name suggests, the service mesh lies over the microservices like a connective fabric. Put another way, a service mesh is a “pattern” that can be implemented to manage the interconnections and relevant logic that drives a microservices-based application.

Why do you need a service mesh?

Monolithic applications do not do well as they grow larger and more complex. It starts to make sense to divide the application into microservices. This approach aligns well with modern application development methodologies like agile, DevOps, and continuous integration/continuous delivery (CI/CD). Software development teams and their partners in testing and operations can focus on new code for individual, independent microservices. This is usually best for the software and the overall business.

As the number of services in the microservices-based app expands, however, it can become challenging to keep up with all the connections. Stakeholders may struggle to track how each service needs to connect and interact with others. Monitoring service health gets difficult. With dozens or even hundreds of microservices to connect and oversee, reliability may become an issue as well.

A service mesh addresses these problems by allowing developers to handle service-to-service communications in a dedicated layer of the infrastructure. Instead of dealing with hundreds of connections, one at a time, developers can manage the entire application through the control plane, which configures the proxies on their behalf. The service mesh provides efficient management and monitoring functionality.

In a nutshell, the core benefits of a service mesh include:

Simplifying communication between microservices

Making it easier to detect and understand communication errors

Increasing app security with encryption, authentication, and authorization

Accelerating app development through faster dev, test, and deployment of new microservices

Service mesh challenges

A service mesh can present its own difficulties, however. For example, the service mesh’s layers become another element of a system that requires infrastructure, maintenance, support, and so forth. They can be a resource drain, affecting overall network and hardware performance. Adding sidecar proxies can potentially inject more complexity into an already complex environment. Running service calls through the sidecar adds a step, which can potentially slow the application down. There can be problems related to integration between multiple microservices architectures. And the underlying network still needs to be managed, even though the service mesh manages the messages between services.

Reducing latency in service meshes with automation

One of the primary concerns with service meshes is the potential for increased latency due to the added layer of proxies that handle service communication. However, service meshes such as Istio and Linkerd come equipped with automation capabilities that help mitigate these latency concerns. Through intelligent traffic management and automated retries, the service mesh can streamline communication pathways and keep delays low. Additionally, Istio uses Envoy to optimize routing decisions, allowing for faster responses between services by leveraging automation to route around potential network bottlenecks. This helps applications maintain high performance, even in large, cloud native environments where latency could otherwise be a significant issue. With continual updates and optimizations from the open source community, modern service meshes are increasingly able to balance advanced traffic management features with minimal overhead.
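
To see why proxy overhead matters more as call chains get deeper, consider a rough back-of-the-envelope estimate. It assumes the sidecar model, where each service-to-service call passes through two proxies (the caller’s and the callee’s), and it treats the per-proxy overhead as a single fixed number. The function name and the figures in the usage note are hypothetical, not measurements of any real mesh.

```python
def added_latency_ms(sequential_hops, per_proxy_overhead_ms):
    """Estimate the extra latency sidecars add to a chain of sequential calls.

    sequential_hops: number of service-to-service calls made one after another
    per_proxy_overhead_ms: assumed cost of passing through one proxy
    """
    proxies_per_hop = 2  # caller's outbound sidecar + callee's inbound sidecar
    return sequential_hops * proxies_per_hop * per_proxy_overhead_ms
```

With an assumed overhead of 0.5 ms per proxy, a request that fans through four sequential calls would pick up `added_latency_ms(4, 0.5)`, or 4.0 ms, in this model. The overhead scales with the depth of the call chain, which is why the routing optimizations described above matter most for deeply nested services.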

Conclusion

A service mesh can help you achieve success with microservices. The technology provides a much-needed layer of infrastructure to handle communications between services and related administrative functions. Implemented the right way, a service mesh provides security, reliability, and observability for microservices-based applications and systems.

Frequently Asked Questions

Service mesh architecture enhances security within microservices by providing a range of features that ensure secure communication and data protection. One key security feature is mutual TLS (mTLS), which enables encrypted communication between services. Additionally, service meshes enable fine-grained access control policies, allowing administrators to define and enforce access permissions at the service level.

Implementing a service mesh can initially seem complex, but the long-term benefits outweigh the initial complexity.

Choose a service mesh solution that aligns with your requirements and infrastructure. Each service mesh has its own features and integrations, so evaluate them based on your specific needs. Begin with a basic setup by deploying the service mesh in a non-production environment or on a limited subset of microservices. Define access control policies, traffic routing rules, and application security configurations based on your organization’s requirements.

Gradually roll out the service mesh to additional microservices and environments as you gain confidence in its stability and performance. Continuously iterate and improve your service mesh setup based on feedback, performance metrics, and evolving requirements.

Here are some factors to consider when deciding on the best service mesh for your needs:

Community support: Look for service meshes with active community support that can provide valuable resources, documentation, and community-driven contributions.

Ease of use: Choose a solution that offers intuitive tooling, clear documentation, and user-friendly interfaces to simplify adoption and ongoing maintenance.

Feature set: Evaluate the features of each service mesh and assess whether it aligns with your requirements. Look for traffic management capabilities, observability tools, and security features.

Compatibility: Ensure the service mesh is compatible with your existing infrastructure, including container orchestration platforms and cloud environments.

Performance and scalability: Choose a solution that can handle your anticipated workload and scale with your infrastructure growth.
